I've now uploaded my Haskell photon mapper and raytracer to hackage. It is listed as a package called "crocodile" (named after my son's current obsession with crocodiles!).

It's all working on the Cornell box. It's pretty memory hungry, and has a few self-declared things to fix (eg irradiance gradients), but it's out there to play with.

Enjoy.

Games programming, graphics programming, and general software development

Tuesday 13 September 2011

Thursday 25 August 2011

This is what happens

When universities teach Java!

(some random bit of disassembled code found on my travels)

00A5CA74 EC7E1824 fdivs f3,f30,f3 56 PIPE

00A5CA78 FC406850 fneg f2,f13

00A5CA7C D0660010 stfs f3,0x10(r6)

00A5CA80 D0C90010 stfs f6,0x10(r9)

00A5CA84 D981FF60 stfd f12,-0xA0(r1) PIPE

00A5CA88 E881FF60 ld r4,-0xA0(r1) 70 (00A5CA84) LHS[01]

00A5CA8C D961FF60 stfd f11,-0xA0(r1) PIPE

00A5CA90 EBA1FF60 ld r29,-0xA0(r1) 70 (00A5CA8C) LHS[01]

00A5CA94 D941FF60 stfd f10,-0xA0(r1) PIPE

00A5CA98 EB81FF60 ld r28,-0xA0(r1) 70 (00A5CA94) LHS[01]

00A5CA9C D921FF60 stfd f9,-0xA0(r1) PIPE

00A5CAA0 EB61FF60 ld r27,-0xA0(r1) 70 (00A5CA9C) LHS[01]

00A5CAA4 D901FF60 stfd f8,-0xA0(r1) PIPE

00A5CAA8 EB41FF60 ld r26,-0xA0(r1) 70 (00A5CAA4) LHS[01]

00A5CAAC D8E1FF60 stfd f7,-0xA0(r1) PIPE

00A5CAB0 EB21FF60 ld r25,-0xA0(r1) 70 (00A5CAAC) LHS[01]

00A5CAB4 D1A6000C stfs f13,0xC(r6) PIPE

00A5CAB8 D029000C stfs f1,0xC(r9)

00A5CABC D801FF60 stfd f0,-0xA0(r1) PIPE

00A5CAC0 E801FF60 ld r0,-0xA0(r1) 70 (00A5CABC) LHS[01]

0

(some random bit of disassembled code found on my travels)

00A5CA74 EC7E1824 fdivs f3,f30,f3 56 PIPE

00A5CA78 FC406850 fneg f2,f13

00A5CA7C D0660010 stfs f3,0x10(r6)

00A5CA80 D0C90010 stfs f6,0x10(r9)

00A5CA84 D981FF60 stfd f12,-0xA0(r1) PIPE

00A5CA88 E881FF60 ld r4,-0xA0(r1) 70 (00A5CA84) LHS[01]

00A5CA8C D961FF60 stfd f11,-0xA0(r1) PIPE

00A5CA90 EBA1FF60 ld r29,-0xA0(r1) 70 (00A5CA8C) LHS[01]

00A5CA94 D941FF60 stfd f10,-0xA0(r1) PIPE

00A5CA98 EB81FF60 ld r28,-0xA0(r1) 70 (00A5CA94) LHS[01]

00A5CA9C D921FF60 stfd f9,-0xA0(r1) PIPE

00A5CAA0 EB61FF60 ld r27,-0xA0(r1) 70 (00A5CA9C) LHS[01]

00A5CAA4 D901FF60 stfd f8,-0xA0(r1) PIPE

00A5CAA8 EB41FF60 ld r26,-0xA0(r1) 70 (00A5CAA4) LHS[01]

00A5CAAC D8E1FF60 stfd f7,-0xA0(r1) PIPE

00A5CAB0 EB21FF60 ld r25,-0xA0(r1) 70 (00A5CAAC) LHS[01]

00A5CAB4 D1A6000C stfs f13,0xC(r6) PIPE

00A5CAB8 D029000C stfs f1,0xC(r9)

00A5CABC D801FF60 stfd f0,-0xA0(r1) PIPE

00A5CAC0 E801FF60 ld r0,-0xA0(r1) 70 (00A5CABC) LHS[01]

0

Wednesday 24 August 2011

Pattern matching as an optimisation method, and a means of insight

One feature of Haskell that I particularly like is its pattern-matching scheme. To route code down the correct codepath, Haskell uses pattern matching against data types and values, instead of extensive flow-control statements. This makes the code much simpler to read, as your code merely concerns itself with calling the correct functions, with the correct handler cases, and the underlying Haskell compiler deals with the flow-control minutiae.

I've increasingly found myself using a similar approach in C++ and HLSL using features such as polymorphic functions. A good example comes from optimisation: frequently, you find cases in the code where someone has written a "kitchen sink" function. This function internally handles many cases, and accordingly takes many parameters.

Frequently, many of these parameters are called with specificliteral values or constants. For example, many parameters may be zero which disable entire codepaths inside the function. For me, this is a bad code smell. But why? Many people would argue that since the code is branched away, what's the big deal? Especially if it means we can have just one definition of a function. Well, branches are very expensive on modern processors. On contemporary consoles, a floating point branch can yield a 27-cycle penalty. Not to mention all the preamble and branch set-up code.

I've therefore found myself increasingly providing multiple specialised versions of these functions, which omit some of the parameters that are permanently called with 0.0f or 1.0f and tailoring the code accordingly. Obviously this saves a lot of needless computation, branching and I$ misses.

What's interesting about this approach is that once you've performed a few of these refactorings, you start to expose patterns in the code and its structure that you previously were unaware of. You may find that your major axis of variation lies not in your chosen object hierarchy composition, but elsewhere. You may have a multitude of objects that add little value at a higher level, but now a large set of lower level functions that process the objects differently. Sometimes it makes more sense to structure and divide your code along the lines of what processing it does, not what you think the object "is", as that is the greater source of variation.

I've increasingly found myself using a similar approach in C++ and HLSL using features such as polymorphic functions. A good example comes from optimisation: frequently, you find cases in the code where someone has written a "kitchen sink" function. This function internally handles many cases, and accordingly takes many parameters.

Frequently, many of these parameters are called with specificliteral values or constants. For example, many parameters may be zero which disable entire codepaths inside the function. For me, this is a bad code smell. But why? Many people would argue that since the code is branched away, what's the big deal? Especially if it means we can have just one definition of a function. Well, branches are very expensive on modern processors. On contemporary consoles, a floating point branch can yield a 27-cycle penalty. Not to mention all the preamble and branch set-up code.

I've therefore found myself increasingly providing multiple specialised versions of these functions, which omit some of the parameters that are permanently called with 0.0f or 1.0f and tailoring the code accordingly. Obviously this saves a lot of needless computation, branching and I$ misses.

What's interesting about this approach is that once you've performed a few of these refactorings, you start to expose patterns in the code and its structure that you previously were unaware of. You may find that your major axis of variation lies not in your chosen object hierarchy composition, but elsewhere. You may have a multitude of objects that add little value at a higher level, but now a large set of lower level functions that process the objects differently. Sometimes it makes more sense to structure and divide your code along the lines of what processing it does, not what you think the object "is", as that is the greater source of variation.

Tuesday 23 August 2011

On the limitations of caching

I've been spending a little time thinking about caching lately. Irradiance caching, optimising some caching in production code, and hardware caches. Here's a little brain-dump.

I've come to the conclusion that if you ever need anything more than a trivially small cache, your algorithm is wrong. This is particularly so for parallel programming, as caches are often a difficult to handle piece of interdependent common state.

My reasoning goes a little something like this. If you are relying on a cache for performance, it is usually to re-use the results of some expensive transformation a -> b. Crucially, there needs to be 1 a to one or more b (otherwise there is no cache reuse!). For an efficient cache, you need:

I've come to the conclusion that if you ever need anything more than a trivially small cache, your algorithm is wrong. This is particularly so for parallel programming, as caches are often a difficult to handle piece of interdependent common state.

My reasoning goes a little something like this. If you are relying on a cache for performance, it is usually to re-use the results of some expensive transformation a -> b. Crucially, there needs to be 1 a to one or more b (otherwise there is no cache reuse!). For an efficient cache, you need:

- To be caching the results of a fairly complex computation,

- To have an efficient means of storing the results of the computation,

- To have a well-behaved cache indexing function.

Now, what often ends up happening is that you iterate over the many objects 'b', and populate the cache on-demand from a common ancestor piece of data, a. And here's the first problem. You are primarily iterating over the wrong dataset, and filtering and culling parts of the other dataset by proxy. You have an implicit data filtering or reduction operation that could be better handled more explicitly.

Ok, so, we switch our iteration round to iterate over the source objects 'a'. We iterate over each 'a' object, and produce the resulting 1 or more 'b' objects. At this point, do we really have a cache? We've turned our cache into a data transformation kernel. All of sudden our cache is no longer some intermediate state used in a computation, but it is now simply a piece of input data. Since we now have a piece of code transforming [a] -> [b], we have a piece of code that is very amenable to data parallelism. Since we've decoupled the code from a cache, it's also much easier to parallelise.

I think ultimately an extensive caching scheme is evidence that the data domain hasn't been adequately structured and managed. If you need a cache, you probably need more data conditioning, filtering, and reduction.

A good counter-example is the vertex stream processing unit of a modern GPU. These units read huge streams of data, transform them, and maintain minimal cached state. Vertex caches are typically miniscule in comparison to the volume of data they process. Yet a post-transform cache can often maintain a very high level of efficiency. The key to this cache's success is the pre-processing (ie structuring) of the data fed into it. It is not random. It is well conditioned, which yields efficiency at a number of levels, and enables a vertex cache to be used only as a relatively modest addition to the pipeline. The cache is the icing on the cake, not a fundamental component of the algorithm.

I think this is an important lesson when transitioning from writing single-threaded to parallel code. Don't dream up increasingly elaborate caching schemes. Instead think up separable, well-structured data-parallel transformations. When you feel the need for a cache, you probably need to carefully re-examine your data flow structure.

Tuesday 5 July 2011

Where'd all the Haskell go?

It's not gone. It'll be back.

I've recently moved house, and obviously that consumes a lot of time both before, and after the move. In order to write a Haskell article, I need to develop supporting code. I don't have much coding time right now.

On the other hand, it's pretty easy to knock out an article based on the knowledge gained during my Master's, and through my industry experience, so I'm writing on those subjects for a while.

Haskell will be back!

I've recently moved house, and obviously that consumes a lot of time both before, and after the move. In order to write a Haskell article, I need to develop supporting code. I don't have much coding time right now.

On the other hand, it's pretty easy to knock out an article based on the knowledge gained during my Master's, and through my industry experience, so I'm writing on those subjects for a while.

Haskell will be back!

Thursday 2 June 2011

Photon Mapping in Haskell

For a while I have been working on extending my Haskell raytracer to include a global illumination model. I chose to implement photon mapping, as it seemed to offer both a number of challenges, and it quite suited a functional programming approach.

Photon Tracing

One of the main challenges of implementing an algorithm like photon mapping is that it relies on a small amount of mutable state due to its monte-carlo integration. As each photon is traced through the scene, a random number is required to determine the fate of a photon for a given photon-to-material interaction. A random number generator typically requires state to iterate from one number to the next. Such state is easy to incorporate into imperative code, but it presents a challenge to the Haskell programmer.

There are two main challenges with state: management and propagation of state, and selecting what code should share a given state context. For example, in the photon mapper I could have introduced a single, global state for the random number generator. Or, I could have given each light source its own state. Or each photon. The greater scope that the state has, the greater the coupling in the code and the greater the difficulty in parallelisation.

I chose to give each photon traced its own random number generator state - and each photon had a different initial seed.

-- Realistic Image Synthesis Using Photon Mapping p60

tracePhoton :: [Photon] -> Photon -> SceneGraph -> StdGen -> (Int, Int) -> [Photon]

tracePhoton !currentPhotons (Photon !photonPower !photonPosDir) sceneGraph !rndState !(bounce, maxBounces) =

-- See if the photon intersects any surfaces

case findNearestIntersection sceneGraph ray of

Nothing -> currentPhotons

Just (obj, t, subId) -> case photonFate of

-- Diffuse reflection. Here, we store the photon that got reflected, and trace a new photon - but only if it's bright enough to be worthwhile

DiffuseReflect -> if Colour.magnitude newPhotonPower > brightnessEpsilon && (bounce + 1) <= maxBounces

then tracePhoton (storedPhoton : currentPhotons) reflectedPhoton sceneGraph rndState'' (bounce + 1, maxBounces)

else storedPhoton : currentPhotons

where

!reflectedPhoton = Photon newPhotonPower (surfacePos, reflectedDir)

!(reflectedDir, rndState'') = diffuseReflectionDirection rndState' tanSpace

-- Specular reflection. Here, we reflect the photon in the fashion that the surface would reflect towards the viewer and

-- aim to absorb it somewhere else in the photon map

SpecularReflect -> if Colour.magnitude newPhotonPower > brightnessEpsilon && (bounce + 1) <= maxBounces

then tracePhoton currentPhotons reflectedPhoton sceneGraph rndState' (bounce + 1, maxBounces)

else currentPhotons

where

!reflectedPhoton = Photon newPhotonPower (surfacePos, reflectedDir)

!reflectedDir = Vector.negate (snd photonPosDir) `reflect` normal

-- Absorb. The photon simply gets absorbed into the map

Absorb -> storedPhoton : currentPhotons

where

!(photonFate, rndState') = runState (choosePhotonFate coefficients) rndState

!coefficients = russianRouletteCoefficients (material obj)

!newPhotonPower = computeNewPhotonPower photonFate coefficients photonPower (material obj)

!tanSpace = primitiveTangentSpace (primitive obj) subId hitPosition obj

!normal = thr tanSpace

!hitPosition = pointAlongRay ray t

!surfacePos = hitPosition + (normal Vector.<*> surfaceEpsilon)

!brightnessEpsilon = 0.1

!storedPhoton = Photon photonPower (surfacePos, snd photonPosDir)

where

!ray = rayWithPosDir photonPosDir 10000

KD-Tree Construction

The resulting list of photons is inserted into a KD tree in order to efficiently locate the set of photons near to a point in space. This is a simple recursive operation:

buildKDTree :: [Photon] -> PhotonMapTree

buildKDTree photons

| length photons == 1 = PhotonMapLeaf (head photons)

| length photons >= 2 = let (boxMin, boxMax) = photonsBoundingBox photons

!axis = largestAxis (boxMax - boxMin)

!photonsMedian = foldr ((+) . fst . posDir) zeroVector photons Vector. fromIntegral (length photons)

!value = component photonsMedian axis

photonsGT = Prelude.filter (\x -> component ((fst . posDir) x) axis > value) photons

photonsLE = Prelude.filter (\x -> component ((fst . posDir) x) axis <= value) photons

in if length photonsGT > 0 && length photonsLE > 0

then PhotonMapNode axis value (buildKDTree photonsGT) (buildKDTree photonsLE)

else let (photons0', photons1') = trace "Using degenerate case" $ degenerateSplitList photons in PhotonMapNode axis value (buildKDTree photons0') (buildKDTree photons1')

| otherwise = error ("Invalid case, length of array is " ++ show (length photons) ++ "\n")

KD-Tree Query

The photon mapping algorithm uses an interesting combination of a kd tree and max-heap to locate only the closest N photons to a point. The max-heap is sorted on the squared distance to a photon. This means the most distant photons are stored towards the top of the max-heap, making them easy to discard.

Traversal of the tree commences with a point of interest and a radius. Any photons found within that radius are added to the max-heap. If the max-heap exceeds its specified size then excess, distant photons are dropped from the top of the heap. Since the max-heap stores the most distant photon at the top of the heap, it is easy to monitor the current-furthest photon and tighten our search radius during traversal.

-- Use a max heap to make it easy to eliminate distant photons

data GatheredPhoton = GatheredPhoton Float Photon deriving (Show)

type PhotonHeap = MaxHeap GatheredPhoton

instance Ord GatheredPhoton where

compare (GatheredPhoton dist1 _) (GatheredPhoton dist2 _)

| dist1 == dist2 = EQ

| dist1 <= dist2 = LT

| otherwise = GT

instance Eq GatheredPhoton where

(GatheredPhoton dist1 _) == (GatheredPhoton dist2 _) = dist1 == dist2

minimalSearchRadius !rSq !photonHeap = case viewHead photonHeap of

Nothing -> rSq

Just (GatheredPhoton dSq _) -> Prelude.min rSq dSq

-- Gather photons for irradiance computations

-- Algorithm adapted from Realistic Image Synthesis Using Photon Mapping p73

gatherPhotons :: PhotonMapTree -> Position -> Float -> PhotonHeap -> Int -> PhotonHeap

gatherPhotons (PhotonMapNode !axis !value gtChild leChild) !pos !rSq !photonHeap !maxPhotons

-- In this case, the split plane bisects the search sphere - search both halves of tree

| (value - posComponent) ** 2 <= rSq = let heap1 = gatherPhotons gtChild pos rSq' photonHeap maxPhotons

rSq'' = minimalSearchRadius rSq' heap1

heap2 = gatherPhotons leChild pos rSq'' photonHeap maxPhotons

newHeap = union heap1 heap2

in Data.Heap.drop (size newHeap - maxPhotons) newHeap

-- One side of the tree...

| posComponent > value = gatherPhotons gtChild pos rSq' photonHeap maxPhotons

-- ... or the other

| posComponent <= value = gatherPhotons leChild pos rSq' photonHeap maxPhotons

-- Prolapse

| otherwise = error "gatherPhotons: unexplained/unexpected case here"

where

!posComponent = component pos axis

!rSq' = minimalSearchRadius rSq photonHeap -- Refine search radius as we go down tree to search no further than closest allowed photon

gatherPhotons (PhotonMapLeaf !p) !pos !rSq !photonHeap !maxPhotons

| distSq < rSq = let newHeap = insert (GatheredPhoton distSq p) photonHeap

in Data.Heap.drop (size newHeap - maxPhotons) newHeap -- Discard any excess photons - we get rid of the furthest ones

| otherwise = photonHeap

where !distSq = pos `distanceSq` (fst . posDir) p

Photon Tracing

One of the main challenges of implementing an algorithm like photon mapping is that it relies on a small amount of mutable state due to its monte-carlo integration. As each photon is traced through the scene, a random number is required to determine the fate of a photon for a given photon-to-material interaction. A random number generator typically requires state to iterate from one number to the next. Such state is easy to incorporate into imperative code, but it presents a challenge to the Haskell programmer.

There are two main challenges with state: management and propagation of state, and selecting what code should share a given state context. For example, in the photon mapper I could have introduced a single, global state for the random number generator. Or, I could have given each light source its own state. Or each photon. The greater scope that the state has, the greater the coupling in the code and the greater the difficulty in parallelisation.

I chose to give each photon traced its own random number generator state - and each photon had a different initial seed.

-- Realistic Image Synthesis Using Photon Mapping p60

tracePhoton :: [Photon] -> Photon -> SceneGraph -> StdGen -> (Int, Int) -> [Photon]

tracePhoton !currentPhotons (Photon !photonPower !photonPosDir) sceneGraph !rndState !(bounce, maxBounces) =

-- See if the photon intersects any surfaces

case findNearestIntersection sceneGraph ray of

Nothing -> currentPhotons

Just (obj, t, subId) -> case photonFate of

-- Diffuse reflection. Here, we store the photon that got reflected, and trace a new photon - but only if it's bright enough to be worthwhile

DiffuseReflect -> if Colour.magnitude newPhotonPower > brightnessEpsilon && (bounce + 1) <= maxBounces

then tracePhoton (storedPhoton : currentPhotons) reflectedPhoton sceneGraph rndState'' (bounce + 1, maxBounces)

else storedPhoton : currentPhotons

where

!reflectedPhoton = Photon newPhotonPower (surfacePos, reflectedDir)

!(reflectedDir, rndState'') = diffuseReflectionDirection rndState' tanSpace

-- Specular reflection. Here, we reflect the photon in the fashion that the surface would reflect towards the viewer and

-- aim to absorb it somewhere else in the photon map

SpecularReflect -> if Colour.magnitude newPhotonPower > brightnessEpsilon && (bounce + 1) <= maxBounces

then tracePhoton currentPhotons reflectedPhoton sceneGraph rndState' (bounce + 1, maxBounces)

else currentPhotons

where

!reflectedPhoton = Photon newPhotonPower (surfacePos, reflectedDir)

!reflectedDir = Vector.negate (snd photonPosDir) `reflect` normal

-- Absorb. The photon simply gets absorbed into the map

Absorb -> storedPhoton : currentPhotons

where

!(photonFate, rndState') = runState (choosePhotonFate coefficients) rndState

!coefficients = russianRouletteCoefficients (material obj)

!newPhotonPower = computeNewPhotonPower photonFate coefficients photonPower (material obj)

!tanSpace = primitiveTangentSpace (primitive obj) subId hitPosition obj

!normal = thr tanSpace

!hitPosition = pointAlongRay ray t

!surfacePos = hitPosition + (normal Vector.<*> surfaceEpsilon)

!brightnessEpsilon = 0.1

!storedPhoton = Photon photonPower (surfacePos, snd photonPosDir)

where

!ray = rayWithPosDir photonPosDir 10000

KD-Tree Construction

The resulting list of photons is inserted into a KD tree in order to efficiently locate the set of photons near to a point in space. This is a simple recursive operation:

buildKDTree :: [Photon] -> PhotonMapTree

buildKDTree photons

| length photons == 1 = PhotonMapLeaf (head photons)

| length photons >= 2 = let (boxMin, boxMax) = photonsBoundingBox photons

!axis = largestAxis (boxMax - boxMin)

!photonsMedian = foldr ((+) . fst . posDir) zeroVector photons Vector. fromIntegral (length photons)

!value = component photonsMedian axis

photonsGT = Prelude.filter (\x -> component ((fst . posDir) x) axis > value) photons

photonsLE = Prelude.filter (\x -> component ((fst . posDir) x) axis <= value) photons

in if length photonsGT > 0 && length photonsLE > 0

then PhotonMapNode axis value (buildKDTree photonsGT) (buildKDTree photonsLE)

else let (photons0', photons1') = trace "Using degenerate case" $ degenerateSplitList photons in PhotonMapNode axis value (buildKDTree photons0') (buildKDTree photons1')

| otherwise = error ("Invalid case, length of array is " ++ show (length photons) ++ "\n")

KD-Tree Query

The photon mapping algorithm uses an interesting combination of a kd tree and max-heap to locate only the closest N photons to a point. The max-heap is sorted on the squared distance to a photon. This means the most distant photons are stored towards the top of the max-heap, making them easy to discard.

Traversal of the tree commences with a point of interest and a radius. Any photons found within that radius are added to the max-heap. If the max-heap exceeds its specified size then excess, distant photons are dropped from the top of the heap. Since the max-heap stores the most distant photon at the top of the heap, it is easy to monitor the current-furthest photon and tighten our search radius during traversal.

-- Use a max heap to make it easy to eliminate distant photons

data GatheredPhoton = GatheredPhoton Float Photon deriving (Show)

type PhotonHeap = MaxHeap GatheredPhoton

instance Ord GatheredPhoton where

compare (GatheredPhoton dist1 _) (GatheredPhoton dist2 _)

| dist1 == dist2 = EQ

| dist1 <= dist2 = LT

| otherwise = GT

instance Eq GatheredPhoton where

(GatheredPhoton dist1 _) == (GatheredPhoton dist2 _) = dist1 == dist2

minimalSearchRadius !rSq !photonHeap = case viewHead photonHeap of

Nothing -> rSq

Just (GatheredPhoton dSq _) -> Prelude.min rSq dSq

-- Gather photons for irradiance computations

-- Algorithm adapted from Realistic Image Synthesis Using Photon Mapping p73

gatherPhotons :: PhotonMapTree -> Position -> Float -> PhotonHeap -> Int -> PhotonHeap

gatherPhotons (PhotonMapNode !axis !value gtChild leChild) !pos !rSq !photonHeap !maxPhotons

-- In this case, the split plane bisects the search sphere - search both halves of tree

| (value - posComponent) ** 2 <= rSq = let heap1 = gatherPhotons gtChild pos rSq' photonHeap maxPhotons

rSq'' = minimalSearchRadius rSq' heap1

heap2 = gatherPhotons leChild pos rSq'' photonHeap maxPhotons

newHeap = union heap1 heap2

in Data.Heap.drop (size newHeap - maxPhotons) newHeap

-- One side of the tree...

| posComponent > value = gatherPhotons gtChild pos rSq' photonHeap maxPhotons

-- ... or the other

| posComponent <= value = gatherPhotons leChild pos rSq' photonHeap maxPhotons

-- Prolapse

| otherwise = error "gatherPhotons: unexplained/unexpected case here"

where

!posComponent = component pos axis

!rSq' = minimalSearchRadius rSq photonHeap -- Refine search radius as we go down tree to search no further than closest allowed photon

gatherPhotons (PhotonMapLeaf !p) !pos !rSq !photonHeap !maxPhotons

| distSq < rSq = let newHeap = insert (GatheredPhoton distSq p) photonHeap

in Data.Heap.drop (size newHeap - maxPhotons) newHeap -- Discard any excess photons - we get rid of the furthest ones

| otherwise = photonHeap

where !distSq = pos `distanceSq` (fst . posDir) p

Parallelisation

The photon tracing stage is trivially easy to parallelise, provided that you have carefully treated the shared state required by the random number generator. Since I have provided each traced photon with it's own state, which is uniquely initialised, all photons are data independent and therefore easy to trace in parallel.

I have not yet parallelised the photon gathering stage, due to some issues with the current implementation's efficiency. Two options present themselves for parallelisation. The first is to parallelise the traversal of high-level sub-branches of the KD tree. This naive approach would yield some speedup, but the work of each thread is likely to be highly unbalanced.

Speculatively, a work-stealing queue type approach may help here. The high-level nodes to be traversed could be initially inserted into a queue. When a worker thread pulls data, it could compare how many nodes remain to be traversed against the total number of workers. If the ratio of nodes:workers becomes very low, nodes could be repeatedly removed from the queue and replaced with their child nodes, until the ratio becomes favourable. This would populate the queue with a fairly heterogenous mixture of large and small amounts of work to be done, which would help fill up and balance processor time across the threads.

Results

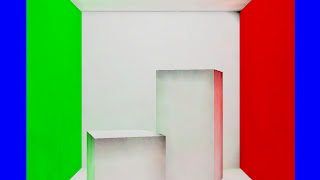

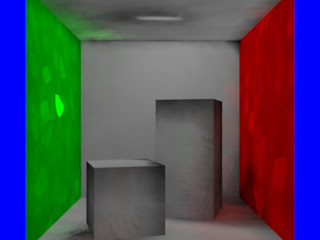

Here is the current output image, with all options cranked up:

The code requires refinement and tuning to eliminate the bias, improve the colour bleeding and fix a few minor artefacts.

The photon mapper is currently very slow. It can take hours on my MacBook Pro to trace a scene containing 100,000 photons, and that is a very small number of photons.

Most of the time is spent in the gathering phase. The current implementation is very space intensive and requires further serious attention. The photon emission and tracing step, however, is extremely quick, requiring only a few seconds to trace 100,000 photons.

Code

Full source code is available on github:

https://github.com/TomHammersley/HaskellRenderer

Future Work

I am now working to improve the efficiency of the gathering phase which will improve efficiency and development speed. I am also implementing an irradiance cache to further optimise execution speed. Clearly various minor bugs also need addressing.

References

Further implementation details can be found primarily in Henrik Wann Jensen's photon mapping book, supplemented by various SIGGRAPH course materials.

Sunday 15 May 2011

Haskell Renderer Source Code on Github

Just a quick note to let anyone interested that the source code to my work-in-progress Haskell raytracer and photon mapper is now available at:

https://github.com/TomHammersley/HaskellRenderer

I'm currently mid way through debugging and optimising the photon map solution. It works, though it takes 10 minutes or so on my MacBook Pro.

When run it will output the following image:

To build the program you just need the latest Haskell Platform and the bmp package from cabal.

I'm still a beginner at Haskell so some of the code may not represent the most idiomatic or best practice Haskell. If anyone has any advice, comments or constructive criticism, I'm keen to hear it at tomhammersley@gmail.com.

(I plan to make the package Cabal compatible soon).

Cheers.

https://github.com/TomHammersley/HaskellRenderer

I'm currently mid way through debugging and optimising the photon map solution. It works, though it takes 10 minutes or so on my MacBook Pro.

When run it will output the following image:

To build the program you just need the latest Haskell Platform and the bmp package from cabal.

I'm still a beginner at Haskell so some of the code may not represent the most idiomatic or best practice Haskell. If anyone has any advice, comments or constructive criticism, I'm keen to hear it at tomhammersley@gmail.com.

(I plan to make the package Cabal compatible soon).

Cheers.

Monday 11 April 2011

Designs That Leak State: Using Haskell Notation to Analyse C++ Design

I came across an interesting design failure today. In short, a C++ object was internally storing the state specific to a piece of multi-threaded client code. The client code was leaking its state data into the C++ object. In generalised, abstracted terms:

class Object

{

Real member variables

...

unsigned char m_state1[MaxContexts];

Each of the threads would read from 'Object', process it, and store the results in one of the elements of m_state.

It's a bad pattern, but I daresay a common pattern.

What is wrong here?

The first thing to notice is the series of member variables, all arrays, sized by MaxContexts. A series of variables like this is a dead giveaway that really there is semantically some concept in the system that is not explicitly represented in the code. Given that we are trying to represent an object in a series of "contexts", with different values for each, it suggests that these variables are not properties of the object, but actually variables of some other, auxiliary state. If there is logically one of something, it's a member variable of the class in question. If an object embeds a number of them, it's probably someone else's variable.

Fundamentally, my problem with this is that we're embedding some client's data within the object itself. When the client code operates on the object, it is no longer a pure transformation of one object into another, it is now a conceptually more complex, confusing transformation of one object, to the same object with new state.

This additional state presents serious problems to multi-threading a piece of code. When foreign state is embedded unnecesarily in an object like this it makes it much more difficult to manage the ownership, lifetime and correctness of data. All the other clients of the Object are burdened with helping manage the state of some other system. Appropriate ownership, coupling, and lifetime of data is all-important in parallel programming.

Our problem is that we have inverted the "has-a" relationship. The object does not "have a" state in a number of contexts. In reality, the contexts have the state and they "have", or refer to the object.

Haskell Function Signature Analysis

I increasingly find that Haskell's function signature notation is a lucid yet lightweight means of analysing data flow. There are many other alternative, successful methods, yet I find the Haskell analysis direct and natural. These days I find myself thinking directly in these terms to understand data transformations.

In Haskell terms, we have:

We're not sure! We would prefer:

Usage of State

The next question is, how is this state really used? It is not really part of an object's update function, as the multiple contexts suggest the data is not part of the object. So, what is the client code of the object doing with the state? It doesn't really matter. Whatever it's doing, we can infer that:

a) The state is a product of the object. If it were not, it would not be in the object.

b) We have multiple contexts, implying multiple passes over the data.

When a piece of state is the product of an object, it is likely that it is intermediate data. Given that we expect multiple passes over the data, we're going to be caching a lot of pieces of state data that have nothing to do with the context in question. We could switch the state representation from SoA to AoS, but it's still data in the wrong place.

Let's say we have 10 'contexts' and 100 objects. Let's say the object is 20 bytes, and the 'state' is 6 byte. We have a total data volume of 100 * (20 + 6 * 10) bytes, of which 100 * (20 + 6) bytes is used. Out of the total data volume, we're therefore using only 33% of the total data volume.

So, if we switch from the:

[Object] -> [Object]

implementation to a

[Object] -> [State]

implementation, we now have 100 * 20 + 100 * 6 bytes total data volume and 100% of the data is used.

Where is the data going?

The previous transformation is essentially a map operation. We're applying a function a list of Objects, and returning a list of State objects. A state is not an endpoint of a data processing; it implies that further processing is going to use the data.

Returning to Haskell, let's say our original state-producing function is f:

state = map f objects

Something else, g, is going to use that data:

output = map g (map f objects)

Here's our first big clue. All that data that originally lived inside the object, most likely on the heap, is really just intermediate data polluting the cache. It's just input to a succeeding function. We can most likely eliminate that "state" data altogether and pass the output directly through to the succeeding function. Or, we can store the state in some piece of intermediate thread-local storage. Either way, we've now got a clearer understanding of the real data flow in the program.

Eliminating the containing Object

The next question becomes, which bits of the Object really contribute to the new output State?

As the State is the product of an Object, and Object is non-trivial it is unlikely that the State is a product of the entire Object. It is probably some subset of member variables.

To use something of a straw man example, suppose the code in question is a visibility operation. Suppose that Object holds a bounding volume, some geometry, and the State holds various visibility test results, distances, LODs and the likely. Our transformation is therefore more likely to be:

BoundingVolume -> LodDescription -> State

Most of the Object's data is not used here. We've passed a subset of the Object's data to some function. This is a naive pattern in C++ code. Useful, general, common patterns of code are often hidden behind a facade of encapsulation. Unrelated pieces of data (in a given context) are brought in unnecessarily. When we develop a semi-abstract purely functional description of the operation, we can build a much clearer understanding of the data flow and dependencies.

Now imagine rewriting and structuring this operation in C++ based on this understanding of the data. Arguably an experienced programmer can arrive directly at the same conclusion, but I find the Haskell notation a useful intermediate tool for reasoning about data flows.

class Object

{

Real member variables

...

unsigned char m_state1[MaxContexts];

float m_state2[MaxContexts];

bool m_state3[MaxContexts];

};Each of the threads would read from 'Object', process it, and store the results in one of the elements of m_state.

It's a bad pattern, but I daresay a common pattern.

What is wrong here?

The first thing to notice is the series of member variables, all arrays, sized by MaxContexts. A series of variables like this is a dead giveaway that really there is semantically some concept in the system that is not explicitly represented in the code. Given that we are trying to represent an object in a series of "contexts", with different values for each, it suggests that these variables are not properties of the object, but actually variables of some other, auxiliary state. If there is logically one of something, it's a member variable of the class in question. If an object embeds a number of them, it's probably someone else's variable.

Fundamentally, my problem with this is that we're embedding some client's data within the object itself. When the client code operates on the object, it is no longer a pure transformation of one object into another, it is now a conceptually more complex, confusing transformation of one object, to the same object with new state.

This additional state presents serious problems to multi-threading a piece of code. When foreign state is embedded unnecesarily in an object like this it makes it much more difficult to manage the ownership, lifetime and correctness of data. All the other clients of the Object are burdened with helping manage the state of some other system. Appropriate ownership, coupling, and lifetime of data is all-important in parallel programming.

Our problem is that we have inverted the "has-a" relationship. The object does not "have a" state in a number of contexts. In reality, the contexts have the state and they "have", or refer to the object.

Haskell Function Signature Analysis

I increasingly find that Haskell's function signature notation is a lucid yet lightweight means of analysing data flow. There are many other alternative, successful methods, yet I find the Haskell analysis direct and natural. These days I find myself thinking directly in these terms to understand data transformations.

In Haskell terms, we have:

f :: Object -> Object

Or possible even:

f :: Object -> (Object, State)

We're not sure! We would prefer:

f :: Object -> State

Usage of State

The next question is, how is this state really used? It is not really part of an object's update function, as the multiple contexts suggest the data is not part of the object. So, what is the client code of the object doing with the state? It doesn't really matter. Whatever it's doing, we can infer that:

a) The state is a product of the object. If it were not, it would not be in the object.

b) We have multiple contexts, implying multiple passes over the data.

When a piece of state is the product of an object, it is likely that it is intermediate data. Given that we expect multiple passes over the data, we're going to be caching a lot of pieces of state data that have nothing to do with the context in question. We could switch the state representation from SoA to AoS, but it's still data in the wrong place.

Let's say we have 10 'contexts' and 100 objects. Let's say the object is 20 bytes, and the 'state' is 6 byte. We have a total data volume of 100 * (20 + 6 * 10) bytes, of which 100 * (20 + 6) bytes is used. Out of the total data volume, we're therefore using only 33% of the total data volume.

So, if we switch from the:

[Object] -> [Object]

implementation to a

[Object] -> [State]

implementation, we now have 100 * 20 + 100 * 6 bytes total data volume and 100% of the data is used.

Where is the data going?

The previous transformation is essentially a map operation. We're applying a function a list of Objects, and returning a list of State objects. A state is not an endpoint of a data processing; it implies that further processing is going to use the data.

Returning to Haskell, let's say our original state-producing function is f:

state = map f objects

Something else, g, is going to use that data:

output = map g (map f objects)

Or in terms of function application:

g . f

Here's our first big clue. All that data that originally lived inside the object, most likely on the heap, is really just intermediate data polluting the cache. It's just input to a succeeding function. We can most likely eliminate that "state" data altogether and pass the output directly through to the succeeding function. Or, we can store the state in some piece of intermediate thread-local storage. Either way, we've now got a clearer understanding of the real data flow in the program.

Eliminating the containing Object

The next question becomes, which bits of the Object really contribute to the new output State?

As the State is the product of an Object, and Object is non-trivial it is unlikely that the State is a product of the entire Object. It is probably some subset of member variables.

To use something of a straw man example, suppose the code in question is a visibility operation. Suppose that Object holds a bounding volume, some geometry, and the State holds various visibility test results, distances, LODs and the likely. Our transformation is therefore more likely to be:

BoundingVolume -> LodDescription -> State

Most of the Object's data is not used here. We've passed a subset of the Object's data to some function. This is a naive pattern in C++ code. Useful, general, common patterns of code are often hidden behind a facade of encapsulation. Unrelated pieces of data (in a given context) are brought in unnecessarily. When we develop a semi-abstract purely functional description of the operation, we can build a much clearer understanding of the data flow and dependencies.

Now imagine rewriting and structuring this operation in C++ based on this understanding of the data. Arguably an experienced programmer can arrive directly at the same conclusion, but I find the Haskell notation a useful intermediate tool for reasoning about data flows.

Thursday 7 April 2011

Ray-Triangle Intersection in Haskell

Recently, I've been experimenting with different ray-triangle intersection algorithms. There are many alternatives out there, and many more optimisations and special cases, but I was looking for an version that ran quickly, and "suited" functional programming.

I settled on the half-planes solution. This algorithm defines a plane at each edge of the triangle by crossing the triangle's normal and the edge direction. To check if a triangle is inside the triangle, you simply check to see if a point (on the plane of the triangle) is on the same side of all of those planes.

This costs a little extra storage space, but it's a very fast test.

So. How does the code pan out? Well, pointInsideTriangle is simple enough:

distanceToPlane :: Shape -> Vector -> Float

distanceToPlane (Plane !norm !dist) !pos = pos `dot3` norm + dist

distanceToPlane _ _ = undefined

pointInsideTriangle :: Triangle -> Position -> Bool

pointInsideTriangle !tri !point = foldr (&&) True $ map (\pln -> (distanceToPlane pln point) >= 0) (halfPlanes tri)

I settled on the half-planes solution. This algorithm defines a plane at each edge of the triangle by crossing the triangle's normal and the edge direction. To check if a triangle is inside the triangle, you simply check to see if a point (on the plane of the triangle) is on the same side of all of those planes.

This costs a little extra storage space, but it's a very fast test.

So. How does the code pan out? Well, pointInsideTriangle is simple enough:

distanceToPlane :: Shape -> Vector -> Float

distanceToPlane (Plane !norm !dist) !pos = pos `dot3` norm + dist

distanceToPlane _ _ = undefined

pointInsideTriangle :: Triangle -> Position -> Bool

pointInsideTriangle !tri !point = foldr (&&) True $ map (\pln -> (distanceToPlane pln point) >= 0) (halfPlanes tri)

This is best read right-to-left. The map applies a lambda function to each of the half planes. This function simply returns a bool, telling us if a point is on the correct side of a triangle. This yields a list of bools. We need to turn that into a single result; this is done using a fold to reduce many values to a single Bool. I've deliberately used a right-fold so that the fold lazily consumes only the necessary parts of the list. It can early-out.

So there we have it. One line of Haskell (with a couple of helper functions) to do the test.

The rest of the Haskell code for a ray-triangle intersection is pretty straightforward:

intersectRayTriangle :: Ray -> Object -> Triangle -> Bool -> Maybe (Float, Triangle)

intersectRayTriangle !ray !obj !triangle !doubleSided

| doubleSided == False && (direction ray) `dot3` (normal $ plane triangle) > 0 = Nothing

| otherwise = case shapeClosestIntersect (plane triangle) ray obj of

Nothing -> Nothing

Just (dist', _) -> if pointInsideTriangle triangle (pointAlongRay ray dist')

then Just (dist', triangle)

else Nothing

Here we have a simple guard condition to permit backface culling. If the triangle is not double-sided, we do a backface culling test and possibly reject it. If not, we fall through to the default case and intersect the ray against the plane, and then test that intersection point for containment in the triangle.

Intersecting against a list is an interesting case. A simple version would just map against the list, and then attempt to find the closest intersection out of the resulting list. However, if we use tail recursion, we can add a parameter to hold our current "state" and therefore maintain a current-closest intersection. This eliminates a second search-the-list step, and also permits an early-reject optimisation:

intersectRayTriangleList :: [Triangle] -> Int -> Maybe (Float, Int) -> Ray -> Object -> Maybe (Float, Int)

intersectRayTriangleList !(x:xs) !index !currentResult !currentRay !obj = intersectRayTriangleList xs (index + 1) newResult newRay obj

where

(newRay, newResult) = case intersectRayTriangle currentRay obj x False of

Nothing -> (currentRay, currentResult)

Just (dist, _) -> (shortenRay currentRay dist, Just (dist, index))

intersectRayTriangleList [] _ !currentResult _ _ = currentResult

The pattern of "searching a list of x and return the closest" occurs frequently in raytracing. This is a potential candidate to be factored out into a re-usable function, or possibly even a monad.

The resulting code is also quite efficient. I'm now running at less than a minute for my test scene of the Cornell Box with 8x8 distributed raytracing. This is 30% quicker than my previously Möller-Trumbore ray-triangle intersection test.

Sunday 3 April 2011

How to Parallelise A Haskell Raytracer

One of the big attractions of functional languages is that they're theoretically very easily to parallelise as they have no problematic side-effects You only describe how to perform a calculation, and never touch on the details of moving data around the machine. The run-time is then free to process data, given your functional expressions, in parallel.

I've been experimenting with Haskell's basic parallelism primitives, par, and pseq. These primitives trigger ("spark") evaluation of expressions in parallel - but only if the runtime deems it necessary.

After many failed or mildly successful experiments, I've finally managed to get a speedup. In short, I stopped trying to reinvent the wheel myself. I simply used parListChunk:

rayTraceImage :: Camera -> Int -> Int -> SceneGraph -> [Light] -> [Colour]

rayTraceImage camera renderWidth renderHeight sceneGraph lights = map (clamp . tracePixel eyePosition sceneGraph lights) rayDirections `using` parListChunk 256 rseq

where !rayDirections = [makeRayDirection renderWidth renderHeight camera (x, y) | y <- [0..(renderHeight - 1)], x <- [0..(renderWidth - 1)]]

!eyePosition = Camera.position camera

Clearly, the nature of the functional approach surrenders a lot of low-level implementation details to your platform's runtime. However, it is more frequently the case that when you require such low-level intervention, you are working around deficiencies in your run-time platform, rather than dealing with fundamental algorithm details. Parallelising many algorithms follows established patterns.

It is also worth noting that the raytracer code is currently pretty straightforward code. I've not implemented any particular tricky optimisations, and certainly none of the ideas in work such as Ingo Wald's parallel raytracing PhD thesis. Not yet, anyway...

I've been experimenting with Haskell's basic parallelism primitives, par, and pseq. These primitives trigger ("spark") evaluation of expressions in parallel - but only if the runtime deems it necessary.

After many failed or mildly successful experiments, I've finally managed to get a speedup. In short, I stopped trying to reinvent the wheel myself. I simply used parListChunk:

rayTraceImage :: Camera -> Int -> Int -> SceneGraph -> [Light] -> [Colour]

rayTraceImage camera renderWidth renderHeight sceneGraph lights = map (clamp . tracePixel eyePosition sceneGraph lights) rayDirections `using` parListChunk 256 rseq

where !rayDirections = [makeRayDirection renderWidth renderHeight camera (x, y) | y <- [0..(renderHeight - 1)], x <- [0..(renderWidth - 1)]]

!eyePosition = Camera.position camera

Here, we break the list of rays to be traced into data parallel chunks of 256, each free to be evaluated by a different thread. Each of these chunks is processed using map. Map applies the raytracing function to convert a ray direction into a colour, and we clamp the result.

And that's it.

Running it on two threads on my dual-core MacBook Pro halves the execution time.

Running it on two threads on my dual-core MacBook Pro halves the execution time.

What's nice is that the core 'map' expression is separated from the details of parallelisation. We've separated the concepts of raytracing and its parallel decomposition into two separate concerns. We are therefore free to vary either without intertwining their concerns.

Not a critical section, lock-free queue or atomic operation in sight!

Clearly, the nature of the functional approach surrenders a lot of low-level implementation details to your platform's runtime. However, it is more frequently the case that when you require such low-level intervention, you are working around deficiencies in your run-time platform, rather than dealing with fundamental algorithm details. Parallelising many algorithms follows established patterns.

It is also worth noting that the raytracer code is currently pretty straightforward code. I've not implemented any particular tricky optimisations, and certainly none of the ideas in work such as Ingo Wald's parallel raytracing PhD thesis. Not yet, anyway...

Thursday 24 March 2011

Laziness vs Strictness in Haskell

I've just taken over a minute of execution time off my raytracer.

One of Haskell's biggest language features is the lazy evaluation system. By default, Haskell evaluates nearly all expressions lazily, as needed. This is one of the features that drew me to Haskell, and indeed it seems a reasonably apt way of writing a raytracer. Raytracing often consists of selectively traversing different parts of large databases for different parts of the scene. Many acceleration schemes involve simple mechanisms to lazily evaluate results.

Laziness, however, does pose an overhead. Haskell introduces a "thunk" for every lazy expression. A thunk describes how to calculate a deferred expression when required. For some expressions, a thunk is a potential time saver: you can defer work until needed, calculate the required value, then re-use it. For other things, such as simple arithmetic operations like vector addition, a thunk is an overhead often comparable to the original operation.

Haskell offers a couple of ways to avoid thunks and therefore lazy evalation. The main two I use are the BangPatterns extension to explicitly mark something as strictly (ie, non-lazily) evaluated, and seq.

The BangPatterns extension allows you to mark expressions that you want to be explicitly evaluated with a !. So, for a Vector, you would write Vector !Float !Float !Float !Float. This way, you force anything you assign to a vector to be strictly evaluated and stored in the vector.

Seq is used to strictly force the evaluation of a function. Seq has the signature of a -> b -> a. It therefore takes two parameters, and returns the second.

An interesting example of where lazy evaluation proven to be an overheard, rather than a win was my light accumulation function. Given a point in space to be shaded, this accumulates the contribution of every light source. Now, the inputs to this function are going to be different every time this function is called as every ray intersection is going to be unique. Thunks will therefore present an additional overhead.

I rewrote my function to use seq. I use seq to strictly evaluate the application of this function, then process the remainder of the list:

accumulateLight :: [Light] -> Colour -> SceneGraph -> Vector -> Vector -> Material -> Vector -> Colour

accumulateLight (x:xs) acc sceneGraph shadePos normal objMaterial viewDirection = let result = acc + (applyLight sceneGraph shadePos normal objMaterial viewDirection x)

in seq result (accumulateLight xs result sceneGraph shadePos normal objMaterial viewDirection)

accumulateLight [] acc _ _ _ _ _ = acc

One of Haskell's biggest language features is the lazy evaluation system. By default, Haskell evaluates nearly all expressions lazily, as needed. This is one of the features that drew me to Haskell, and indeed it seems a reasonably apt way of writing a raytracer. Raytracing often consists of selectively traversing different parts of large databases for different parts of the scene. Many acceleration schemes involve simple mechanisms to lazily evaluate results.

Laziness, however, does pose an overhead. Haskell introduces a "thunk" for every lazy expression. A thunk describes how to calculate a deferred expression when required. For some expressions, a thunk is a potential time saver: you can defer work until needed, calculate the required value, then re-use it. For other things, such as simple arithmetic operations like vector addition, a thunk is an overhead often comparable to the original operation.

Haskell offers a couple of ways to avoid thunks and therefore lazy evalation. The main two I use are the BangPatterns extension to explicitly mark something as strictly (ie, non-lazily) evaluated, and seq.

The BangPatterns extension allows you to mark expressions that you want to be explicitly evaluated with a !. So, for a Vector, you would write Vector !Float !Float !Float !Float. This way, you force anything you assign to a vector to be strictly evaluated and stored in the vector.

Seq is used to strictly force the evaluation of a function. Seq has the signature of a -> b -> a. It therefore takes two parameters, and returns the second.

An interesting example of where lazy evaluation proven to be an overheard, rather than a win was my light accumulation function. Given a point in space to be shaded, this accumulates the contribution of every light source. Now, the inputs to this function are going to be different every time this function is called as every ray intersection is going to be unique. Thunks will therefore present an additional overhead.

I rewrote my function to use seq. I use seq to strictly evaluate the application of this function, then process the remainder of the list:

accumulateLight :: [Light] -> Colour -> SceneGraph -> Vector -> Vector -> Material -> Vector -> Colour

accumulateLight (x:xs) acc sceneGraph shadePos normal objMaterial viewDirection = let result = acc + (applyLight sceneGraph shadePos normal objMaterial viewDirection x)

in seq result (accumulateLight xs result sceneGraph shadePos normal objMaterial viewDirection)

accumulateLight [] acc _ _ _ _ _ = acc

This change alone saved over a minute of execution time! Since a raytracer generally consists of many similar kinds of loops, I am now searching for wider application of this technique. It's a very difficult call to make with a limited data set. Some functions may be faster if strictly evaluated with a small dataset, but may perform better if evaluated lazily with a larger dataset.

Profiling will guide me.

Wednesday 23 March 2011

Pragmatism vs Idealism (DOD vs OOP)

So, it seems no blog these days is complete without a yet another stab at the DOD vs OOP argument.

Here's my take.

I could have picked any number of dichotomy based titles for this post. Objective vs Subjective. Pragmatism vs Idealism. Design vs Performance. PC vs Console. There is a tendency to view DOD and OOP as somewhat polar extremes that you are either for, or against. Personally, I see merit in both camps. It is something of a false dichotomy. I see value in the ideals of OOP and DOD. Equally, I see shortcomings in both camps. For me, it's not an either/or, it's a question of how you blend them together.

For me, the real key issue is choosing what approach to adopt for a given task. This is where the real challenge lies.

The arguments used to support OOP are essentially based on intangible qualities. Design. Re-use. Extensibility. Maintainability. Elegance. Whilst valuable, these are generally somewhat subjective qualities. What constitutes good design is based on widely held best practices, and those practices are based on a consensus of constantly evolving expert opinion. It's very hard to measure these things explicitly. It's very hard to say how much "better" a more OOP centric approach can make a piece of code.

The arguments for DOD, by contrast, are based on real-world, objective data: performance. You can directly measure performance, and directly state the improvement that a DOD approach has yielded for a given task. Similarly, you can measure the cost of an OOP based approach in terms of lost performance. Performance has a useful, marginal benefit in a software project. Other qualities have their benefits but are frequently less directly felt.

You therefore end up with a challenging situation. How can the intangible qualities that support OOP argue against the real-world, objective data supporting DOD? How can we support OOP over DOD when it is based on subjective preferences, leading to design decisions that are somewhat arbitrary. Especially when you are trying to a ship a console game!

As I see it, the performance data that supports DOD should be supporting both arguments. The current generation of consoles in many ways are indicative of what future computing platforms will generally look like. Many cores. Slow memory. Long, simple instruction pipelines.

OOP has its positive qualities, but it is important to view it in context: it largely emerged as a means of improving the structure of large software systems by breaking software into singular, encapsulated objects. Whilst these concerns are significant, there are other, equally valid ways of addressing those concerns than OOP alone. And with the continuing, accelerating trend of parallel hardware, OOP alone cannot be positioned as the primary concern in designing software systems.

For me, my primary concern in software design is parallelism first, DOD second and OOP third.

Here's my take.

I could have picked any number of dichotomy based titles for this post. Objective vs Subjective. Pragmatism vs Idealism. Design vs Performance. PC vs Console. There is a tendency to view DOD and OOP as somewhat polar extremes that you are either for, or against. Personally, I see merit in both camps. It is something of a false dichotomy. I see value in the ideals of OOP and DOD. Equally, I see shortcomings in both camps. For me, it's not an either/or, it's a question of how you blend them together.

For me, the real key issue is choosing what approach to adopt for a given task. This is where the real challenge lies.

The arguments used to support OOP are essentially based on intangible qualities. Design. Re-use. Extensibility. Maintainability. Elegance. Whilst valuable, these are generally somewhat subjective qualities. What constitutes good design is based on widely held best practices, and those practices are based on a consensus of constantly evolving expert opinion. It's very hard to measure these things explicitly. It's very hard to say how much "better" a more OOP centric approach can make a piece of code.

The arguments for DOD, by contrast, are based on real-world, objective data: performance. You can directly measure performance, and directly state the improvement that a DOD approach has yielded for a given task. Similarly, you can measure the cost of an OOP based approach in terms of lost performance. Performance has a useful, marginal benefit in a software project. Other qualities have their benefits but are frequently less directly felt.

You therefore end up with a challenging situation. How can the intangible qualities that support OOP argue against the real-world, objective data supporting DOD? How can we support OOP over DOD when it is based on subjective preferences, leading to design decisions that are somewhat arbitrary. Especially when you are trying to a ship a console game!

As I see it, the performance data that supports DOD should be supporting both arguments. The current generation of consoles in many ways are indicative of what future computing platforms will generally look like. Many cores. Slow memory. Long, simple instruction pipelines.

OOP has its positive qualities, but it is important to view it in context: it largely emerged as a means of improving the structure of large software systems by breaking software into singular, encapsulated objects. Whilst these concerns are significant, there are other, equally valid ways of addressing those concerns than OOP alone. And with the continuing, accelerating trend of parallel hardware, OOP alone cannot be positioned as the primary concern in designing software systems.

For me, my primary concern in software design is parallelism first, DOD second and OOP third.

Tuesday 22 March 2011

Sphere Tree Construction and Traversal in Haskell

Finally. I've finally finished writing and debugging my sphere tree construction code in Haskell.

It should have been so easy. Tree structures are very easily recursively defined which is ideal for functional languages like Haskell. Take your data, split it up, and build the children by recursion. Simple. Only it was anything but...

The logic of the task was simple enough. The main problem was debugging. This is the sort of task that is prone to simple mistakes that cause time-consuming debugging problems. When working in an imperative language, you can easily put down a breakpoint and inspect your data, or if you recur too much the stack blows up, or you can step through your code and see why you're getting stuck in loops. These strategies don't map well to Haskell.

What does work in Haskell is to start small, build up gradually and constantly verify your results. You end up with a development cycle that is fundamentally very similar to test driven development. You want to prove your code one little function at a time by feeding it controlled inputs and inspecting the outputs. In Haskell, your primary debugger is your brain - you need to think very carefully through your code and how your express your algorithms. Functional languages reduce the scope of creating simple bugs due to side effects, and Haskell in particular eliminates a lot of plumbing and housekeeping code. What's left is purely your algorithm - inspect it carefully, that is where your problem lies.

Bounding Volume Hierarchies

I considered a few approaches for my bounding volume hierarchy, and for now, I've settled on a fairly generic implementation of bounding volume hierarchies that currently is configured as a sphere tree.

Each tree node can optionally (Maybe) hold an object. Each node has a sphere, but that could later be generalised. The children are defined as a list, allowing me to switch using a quadtree, kdtree or octree type approach.

(Note: The code below is best copied and viewed in a real text editor. Also, I am not yet a Haskell expert, and there may be many superior ways to achieve these results. My purpose in writing is to share my experiences as a learner with other learners.)

data SphereTreeNode = SphereTreeNode { object :: Maybe Object, children :: [SphereTreeNode], boundingRadius :: Float, boundingCentre :: Vector } deriving (Show, Read)

Sphere Tree Construction

The tree itself is constructed using a fairly simple piece of Haskell:

-- Build up a sphere tree

buildSphereTree :: ([Object] -> [[Object]]) -> [Object] -> SphereTreeNode

buildSphereTree _ (obj : []) = SphereTreeNode (Just obj) [] nodeRadius nodeCentre

where

nodeCentre = calculateMeanPosition (obj:[])

nodeRadius = calculateBoundingRadius (obj:[]) nodeCentre

buildSphereTree builder (obj:objs)

| length (obj:objs) == 1 = error "Should have been handled by a different pattern"

| length (obj:objs) == 0 = error "Should not have zero objects"

| otherwise = SphereTreeNode Nothing nodeChildren nodeRadius nodeCentre

where

nodeCentre = calculateMeanPosition (obj:objs)

nodeRadius = calculateBoundingRadius (obj:objs) nodeCentre

nodeChildren = map (buildSphereTree builder) (builder (obj:objs))

buildSphereTree _ [] = error "Should not hit this pattern for buildSphereTree"

It should have been so easy. Tree structures are very easily recursively defined which is ideal for functional languages like Haskell. Take your data, split it up, and build the children by recursion. Simple. Only it was anything but...

The logic of the task was simple enough. The main problem was debugging. This is the sort of task that is prone to simple mistakes that cause time-consuming debugging problems. When working in an imperative language, you can easily put down a breakpoint and inspect your data, or if you recur too much the stack blows up, or you can step through your code and see why you're getting stuck in loops. These strategies don't map well to Haskell.

What does work in Haskell is to start small, build up gradually and constantly verify your results. You end up with a development cycle that is fundamentally very similar to test driven development. You want to prove your code one little function at a time by feeding it controlled inputs and inspecting the outputs. In Haskell, your primary debugger is your brain - you need to think very carefully through your code and how your express your algorithms. Functional languages reduce the scope of creating simple bugs due to side effects, and Haskell in particular eliminates a lot of plumbing and housekeeping code. What's left is purely your algorithm - inspect it carefully, that is where your problem lies.

Bounding Volume Hierarchies

I considered a few approaches for my bounding volume hierarchy, and for now, I've settled on a fairly generic implementation of bounding volume hierarchies that currently is configured as a sphere tree.

Each tree node can optionally (Maybe) hold an object. Each node has a sphere, but that could later be generalised. The children are defined as a list, allowing me to switch using a quadtree, kdtree or octree type approach.

(Note: The code below is best copied and viewed in a real text editor. Also, I am not yet a Haskell expert, and there may be many superior ways to achieve these results. My purpose in writing is to share my experiences as a learner with other learners.)

data SphereTreeNode = SphereTreeNode { object :: Maybe Object, children :: [SphereTreeNode], boundingRadius :: Float, boundingCentre :: Vector } deriving (Show, Read)

Sphere Tree Construction

The tree itself is constructed using a fairly simple piece of Haskell:

-- Build up a sphere tree

buildSphereTree :: ([Object] -> [[Object]]) -> [Object] -> SphereTreeNode

buildSphereTree _ (obj : []) = SphereTreeNode (Just obj) [] nodeRadius nodeCentre

where

nodeCentre = calculateMeanPosition (obj:[])

nodeRadius = calculateBoundingRadius (obj:[]) nodeCentre

buildSphereTree builder (obj:objs)

| length (obj:objs) == 1 = error "Should have been handled by a different pattern"

| length (obj:objs) == 0 = error "Should not have zero objects"

| otherwise = SphereTreeNode Nothing nodeChildren nodeRadius nodeCentre

where

nodeCentre = calculateMeanPosition (obj:objs)

nodeRadius = calculateBoundingRadius (obj:objs) nodeCentre

nodeChildren = map (buildSphereTree builder) (builder (obj:objs))

buildSphereTree _ [] = error "Should not hit this pattern for buildSphereTree"

-- Find the mean of a collection of objects

calculateMeanPosition' :: [Object] -> Vector -> Vector

calculateMeanPosition' (obj : objects) acc = calculateMeanPosition' objects acc + (getCentre obj)

calculateMeanPosition' [] acc = acc

calculateMeanPosition :: [Object] -> Vector

calculateMeanPosition objects = setWTo1 ((calculateMeanPosition' objects zeroVector) fromIntegral (length objects))

-- Find the overall bounding radius of a list of objects

calculateBoundingRadius :: [Object] -> Vector -> Float

calculateBoundingRadius objs centre = foldr Prelude.max 0 (map (\obj -> shapeBoundingRadius (shape obj) (transform obj) centre) objs)

This object simply recursively builds a tree until it encounters an object list of size 1.

I've deliberately exercised a couple of Haskell idioms here. I've used another tail-recursive loop to calculate the mean centre of the objects, and a map/fold pair to calculate the sphere's bounding radius.

The most interesting part is the first parameter to buildSphereTree. I pass in a function of type ([Object] -> [[Object]]). This function is responsible for dividing the object list into a number of lists, each of which is the object list used to build a new child node. This trick allows me to abstract out the specific algorithm for building the tree into a user-supplied function.

In my current code, I'm using a simple KD-type approach:

-- Generate a plane to split the objects along

makeSplittingPlane :: AABB -> (Vector, Float)

makeSplittingPlane (boxMin, boxMax) = case largestAxis (boxMax - boxMin) of

0 -> (xaxis, -(vecX midPoint))

1 -> (yaxis, -(vecY midPoint))

2 -> (zaxis, -(vecZ midPoint))

_ -> error "Undefined value"

where

midPoint = (boxMin + boxMax) <*> 0.5

-- Make children using a kd tree

generateSceneGraphUsingKDTree :: [Object] -> [[Object]]

generateSceneGraphUsingKDTree objs = leftObjects : rightObjects : []

where

objBox = objectListBoundingBox objs

(planeNormal, planeDist) = makeSplittingPlane objBox

(leftObjects, rightObjects) = partition (\obj -> planeDist + (dot3 planeNormal $ getCentre obj) > 0) objs

We simply make a splitting plane and split the list of objects according to what side of the plane their centre lies: [[Object], [Object]].

I could easily replace this function with an alternative strategy. Or even, make a function that returns a function based on the characteristics of the presented list...

Bounding Volume Hierarchy Intersection

Now this is the real meat of the implementation. The function again takes the form of a tail-recursive loop. The code is essentially similar to implementing a recursive function using a software stack in C. The tail recursion is used to make a simple loop-type recursion rather than a true state-accumulation call-type recursion.

The code is somewhat complex as several optimisations have been added.

The code is passed a list of nodes to process. We process the first node in the list, and recur with the remainder of the list - and possibly, some newly added nodes. The code seeks to find and maintain the closest-intersecting object.

The code constructs a tuple of three values - a result for this object, a list of additional nodes to process and a ray. If an object or bounding volume is hit, this tuples holds new values. If no intersection is found, we simply continue with the existing state passed into the function.

The code first considers whether the given node has any children; if it has none, it only makes sense to test the object that the node should hold. We report an error if the node has no object (a node without children or objects is useless!).

If the node does have children, it holds a subtree we wish to accept or reject. We first test the bounding volume. If this is rejected, we simply retain the current results of the loop with no additional nodes to process. If the bounding volume is intersected, then we will at least recur to the children nodes. If the node contains an object and it is intersected, we update our current intersection results and shorten our ray.

Finally, we pattern match the empty array case to simply return the current results.

Here is the code:

intersectSphereTree :: [SphereTreeNode] -> Ray -> Maybe (Object, Float, Int) -> Maybe (Object, Float, Int)

intersectSphereTree (node:nodes) ray currentHit = intersectSphereTree (newNodeList ++ nodes) newRay thisResult

where

-- Intersect the ray with the bounding volume of this node

(thisResult, newNodeList, newRay) = case children node of

-- If the node has no children, don't bother with it's bounding volume and just check the object (if it has one)

[] -> case object node of

Nothing -> error "A node with no children should hold an object"

Just obj -> case shapeIntersect (shape obj) ray (transform obj) of

-- Didn't hit the object. Retain the current hit, and continue with remaining nodes on the list

Nothing -> (currentHit, [], ray)

-- We did hit this object. Update the intersection, and continue with remaining nodes on the list

Just (objHitDistance, objHitId) -> (Just (obj, objHitDistance, objHitId), [], shortenRay ray objHitDistance)

-- We have children. In this case it makes sense to test our bounding volume

nodeChildren -> case shapeIntersect (Sphere (boundingRadius node)) ray (translationMatrix' (boundingCentre node)) of -- (make a sphere centred at the object's transform matrix with given radius)

-- If we do not find an intersection, we do not update the results and we offer no further nodes to be traversed, thus skipping this subtree

Nothing -> (currentHit, [], ray)

-- If we do find an intersection against the bounding volume, then we try again against the actual object (if present)

Just (_, _) -> case object node of

Nothing -> (currentHit, nodeChildren, ray) -- No object; just pass to the children

Just obj -> case shapeIntersect (shape obj) ray (transform obj) of

-- Didn't hit the object. Retain the current hit, but offer up the children of the node as we hit the bounding volume

Nothing -> (currentHit, nodeChildren, ray)

-- We did hit this object. Update the intersection, and continue with the bounding volume's children

Just (objHitDistance, objHitId) -> (Just (obj, objHitDistance, objHitId), nodeChildren, shortenRay ray objHitDistance)

intersectSphereTree [] _ currentHit = currentHit

The striking thing about this code is that it steadily marches to the right of the screen, with each indentation being a new test of success or failure. Ideally, this code could be rewritten as a monad.

What About Infinite Objects?

They're simply "filter"ed out at the time of the sphere tree construction, and added to a separate list. It makes little sense to include an infinite object into any bounding volume hierarchy.

Results

The code continues to render my test scene as expected. Disappointingly, it is currently a little slower; however, this is not due to the scene graph code. I have recently rewritten my vector classes to be instances of the Num typeclass, so that I can simply write a + b, rather than coining new operators such as a <+> b. I haven't yet fully optimised my new code.

Other Observations

As I get further into Haskell, I'm finding myself increasingly thinking in terms of Lambda calculus. I no longer find myself thinking of what iterative steps I need to do to complete an operation, rather I find myself thinking in terms of the transformations of the underlying data and accompanying functions.